This topic comprises 3 pages: 1 2 3


Topic: WTH? Quality passable for 4K at 10mbit/s????????

Julio Roberto

Jedi Master Film Handler

Posts: 938

From: Madrid, Madrid, Spain

Registered: Oct 2008

posted 04-23-2009 05:23 PM

OK, this is potentially paradigm-shifting news, the kind we need to sit on for a while before judging.

The RED company, a bunch of guys known for making the cameras used in films like the recent Knowing, have been working for a while on a couple of cool products.

Well, it seems that today they showed a group of respected industry people a preview of their upcoming "Red-ray" product, which could be thought of as a "DVD player" geared towards 4K "quality" material.

http://www.red.com/nab/redray/

Now, please bear with me, as the extent of these claims and of the demonstration is staggering.

First, a bit of background. MB/s, with an uppercase "B", means megabytes per second, while Mb/s, with a lowercase "b", means megabits per second. As we all know, a byte is 8 bits, so a megabyte is 8 times the "amount of information" of a megabit.

Digital cinema is distributed as digital files which can not exceed, either for 2K-24fps, 2K-48fps (3D) or 4K the data rate of 250Mbit/s.

As a result, a regular movie shown in a theater, whether 2K, 3D or 4K, will take a maximum of approx. 225GB of hard drive space (depending on the duration of the movie, of course; 225GB is two hours at the full 250Mb/s). The actual file may be larger if it contains several language tracks or the like, but the amount of "information" that goes to the projector maxes out at 250Mb/s.
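As a rough check of that figure, a constant bit rate converts to storage as sketched here. The function name is made up for illustration, gigabytes are taken as decimal (10^9 bytes), and the stream is assumed to sit at the 250Mb/s ceiling for the whole running time, which a real DCinema file need not do.

```python
def stream_size_gb(bitrate_mbit_s: float, minutes: float) -> float:
    """Size in decimal gigabytes of a stream at a constant bit rate."""
    megabits = bitrate_mbit_s * minutes * 60  # total megabits over the running time
    megabytes = megabits / 8                  # 8 bits per byte
    return megabytes / 1000                   # 1000 MB per decimal GB


DCI_MAX_MBIT_S = 250  # maximum DCinema data rate quoted in the post

for minutes in (90, 120):
    size = stream_size_gb(DCI_MAX_MBIT_S, minutes)
    print(f"{minutes} min at {DCI_MAX_MBIT_S} Mb/s: {size:.1f} GB")
```

Under these assumptions a two-hour feature at the full rate comes to 225GB, and a 90-minute one to a bit under 170GB.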

Now, DCinema files are compressed quite INefficiently by using M-JPEG2K compression. What this means, is that you could get THE SAME QUALITY by using more powerful compression algorithms and the size could be reduced to AT LEAST 1/8 of what it is, but the servers would have to be far more powerful (=more expensive).

DCI settled the specs for 12bits JPEG2K because that's the only suitable (i.e. from patents and development point of view, etc) codec that was available at the time in a cheap and convenient way for "regular" (i.e. not too powerful) servers. It was a good choice, but not "the best technologically possible" choice.

So with a "good codec" and plenty of processing power, we could today fit the same quality as DCinema (again, 2K, 4K or 3D, it all uses the same maximum data rate) into, "easily", about 35Mb/s.

This can easily be checked against another similar format, blu-ray, which uses roughly the same resolution as 2K (i.e. 1920x1080 at 24fps) and gives similar results (very good looking images). Blu-ray only has to encode 8 bits instead of 12 bits and only 2/3 of the "color information".

Well, blu-ray, which (often) uses a pretty good compression codec (i.e. more efficient than DCinema), uses a maximum of around 54Mb/s. This includes multiple sound channels (i.e. compressed 5.1 sound).

So I guess we can all agree that you can get really good, basically 2K quality images (forget sound), at data rates of about 50Mb/s with "good commercial or home technology", like blu-ray or Dcinema players.

Well, here comes the big news.

A respected company prepared uncompressed "4K" material (not true 4K, but 4K bayer, something close to 3.2K perhaps, but certainly over 2.7K), at a data rate of about 1.3GB/s (notice the uppercase B, and that we are talking gigabytes and not megabytes per second).

They compressed it with redcode, the wavelet-based production (acquisition) compression, to about 450MB/s.

This is basically what would arrive at a post production facility to edit the movie with. We went down from GB to MB, but that is still 450MB/s (uppercase) against the final result seen in cinemas at 250Mb/s (lowercase, which, again, means 8 times less).

Well, they displayed, side by side and one after the other, the "master" 4K 450MB/s file and the same material encoded in red-ray, their new product, at 10Mb/s ... that's right, lowercase "b", and "M" for mega ... that is, 10 megabits per second, or only about 20% more than regular DVD and less than half the 25Mb/s of home DV video.

And guess what?

Industry people couldn't believe their eyes.

They couldn't see significant differences between the master-quality 4K projection and the absurdly compressed one coming from the red-ray disc.

That means that an image visually indistinguishable from a better-than-DCinema 4K projection can be played from a file that's only about 8GB in size for your average movie.
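To put the numbers quoted so far on one scale, here is a small sketch that converts every rate to megabits per second and compares it with the claimed 10Mb/s. The helper name and the decimal unit factors (1GB = 1000MB) are assumptions made for illustration; the rates themselves are the ones given above.

```python
UNIT_TO_MBIT = {"Mb/s": 1, "MB/s": 8, "GB/s": 8000}  # decimal units assumed


def to_mbit_per_s(value: float, unit: str) -> float:
    """Convert a data rate to megabits per second."""
    return value * UNIT_TO_MBIT[unit]


REDRAY = to_mbit_per_s(10, "Mb/s")

sources = {
    "uncompressed 4K bayer, 1.3 GB/s": to_mbit_per_s(1.3, "GB/s"),
    "redcode master, 450 MB/s": to_mbit_per_s(450, "MB/s"),
    "DCinema maximum, 250 Mb/s": to_mbit_per_s(250, "Mb/s"),
}

for name, rate in sources.items():
    print(f"{name}: {rate / REDRAY:.0f}:1 against red-ray")

# Running time that fits in 8 GB at a constant 10 Mb/s
minutes_in_8gb = to_mbit_per_s(8, "GB/s") / REDRAY / 60
print(f"8 GB holds about {minutes_in_8gb:.0f} minutes at 10 Mb/s")
```

With these figures the claimed stream is roughly 1040 times smaller than the uncompressed material, 360 times smaller than the redcode master and 25 times smaller than the DCinema ceiling, and 8GB holds a little over 106 minutes.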

We go from DCinema's 4K JPEG2K 250Mb/s to Red-ray 10Mb/s and the quality (so it's claimed) visually remains not the same, but better.

Now, I *AM* knowledgeable in compression technology, and even was an advisor to Compuserve on the implementation of Jpeg when it was born (I had developed the fastest .GIF decompressor at the time, which was the image format of choice before JPEG was developed). I'm familiar with Shannon theories of information, entropy encoding, DCT and wavelets.

I know that if inter-frame redundancy were used in DCI compression, the same quality level could be achieved with about 30-50Mb/s, down from the current-but-inefficient 250Mb/s, again, even for 4K movies.

But to go all the way down to 10Mb/s would be truly amazing.

So the claim is:

Red-ray can display very good looking 4K-like images at around 10Mb/s and, thus, off a simple and "cheap" DVD mechanism with a whole movie, if it's not too long, fitting in one DVD.

I, for one, remain sceptical, as this would be at least 4 times better than what I believe current technology (or even physics itself) would allow for most types of natural-looking but richly detailed images. But the claim is that bold, and it is backed up by witnesses and industry people, so we must take it into account for the time being.

If this is "confirmed" etc, it could mean that Cinema servers and blu-rays could become "obsolete" in the sense that a 4K player capable of taking a $1 disc and putting out an image of equivalent (or superior) quality from DCI 4K could be bought for cheap.

Of course, whether Hollywood or anybody else would support such a format is another matter, as is the price tag this product may carry. That would depend on whether they target the domestic market, where it would be only a hundred dollars or two, or professional products, where they could demand up to 5 grand for the machine.

Probably the latter, but who knows.

Perhaps I should change the title of this thread to: movie studios start sending their 4K films on a 8GB pendrive instead of on 100GB files on hard drives. Or maybe as an email attachment ![[Razz]](tongue.gif)


Julio Roberto

Jedi Master Film Handler

Posts: 938

From: Madrid, Madrid, Spain

Registered: Oct 2008

posted 04-23-2009 08:43 PM

I agree, Mark.

I have also seen Showscan. There is (at least it was there some 10 years ago) an attraction in Madrid's Parque de Atracciones that used the system. I was not so much impressed, but that's just me.

I've seen plenty of large format, i.e. 70mm at 60fps, and I didn't find it all that realistic looking, probably because of the scratches etc. on the film. Then again, maybe that's just me being picky.

I also hate when a (giant) hair gets stuck somewhere in the Imax optical path ![[Big Grin]](biggrin.gif)

The obsession with counting pixels doesn't take into account that, under the usual viewing conditions (i.e. a little far away from the "screen"), our visual system does NOT allow the fine detail to be perceived and, thus, 2K or 4K or Imax all look EXACTLY the same, as our eyes are the limiting factor.

Of course, those sitting in the first rows or examining the frames under a microscope for pixels, see a different thing, and for them, pixel amount is important.

The space between pixels, fixed-grid moirés and other sampling artifacts etc, may also be an important factor in perceived quality, rather than detail.

As I said before, I feel 2K is fine and actually just about "as good as it gets" for those sitting in the back 2 or 3 rows of an average theater.

But I do believe that 35mm true-film ("3-perf" flat or s35 originated scope), which we are trying to replace, is a bit above (true) 2K in amount of detail conveyed and, thus, since we are trying to replace it or improve upon it, we should go for something better.

BTW: the RED people say the Red-ray player will go for under $1000 and be available by the end of the year. ![[Eek!]](eek.gif)

[ 04-23-2009, 09:56 PM: Message edited by: Julio Roberto ]


Julio Roberto

Jedi Master Film Handler

Posts: 938

From: Madrid, Madrid, Spain

Registered: Oct 2008

posted 04-25-2009 11:39 AM

I hope it's ok to reply to myself, as this is a "new" piece of information.

http://visualnary.com/tag/red-ray

[link to small article on the red-ray demonstration]

quote:

So RED just had their first public showing of their REDRay magic box. Some compression wizzardry made it possible to compress 4k video by the factor of 750 - resulting in 4k footage at a datarate that is half of standard definition miniDV. Basically you get a picture way, way, way better than HD at a fraction of BluRay’s data rate. This might just turn the whole delivery upside down, as it will enable distribution of high quality films via standard broadband. Also, digital cinema will not need proprietary distribution technology, because a feature film will fit on a standard DVD-R.

Some "cellphone" video from parts of the Red-party event:

http://es.justin.tv/clip/816cd7fb05b3a74b

I'm sure the reality is not going to live up to the hype, but it also won't be bad.

I could certainly use a good-looking, 10-bit color display format with very high resolution, passable for 4K, for the price of a red-ray player (under $1000) by year's end, on $1 DVD-R discs I can burn at home.


Julio Roberto

Jedi Master Film Handler

Posts: 938

From: Madrid, Madrid, Spain

Registered: Oct 2008

posted 04-25-2009 02:01 PM

Well, RAW doesn't automatically equal un-compressed, and, actually, all RED cameras usually shoot to compressed RAW to memory cards or discs (with wavelet-based redcode).

It's just that they compress the RAW, instead of compressing a demosaic'ed and white balanced RGB, i.e.

Trying to work with uncompressed 4K (or even 2K) is not very practical and totally unnecessary. Of course, on certain occasions like resolution tests or special effects work, it may be used.

DP's may or may not (probably not ![[Wink]](wink.gif) ) want to watch the 4K pictures out of a heavily compressed redray, but I can assure you the editors will want to. Desperately.

I'm also sceptical that more than about 2.5K of detail actually survives this process. But I KNOW that a level visually comparable to, or higher than, uncompressed 2K is attainable at those data rates for many types of images and situations.

You have to realize that there is actually very little detail to be seen at normal viewing distances on your normal-sized displays and screens that about 3K won't cover.

As everyone in the industry says: with 4K all you get is a little under 3K of detail and 1K of noise.

Compression is great at figuring out how to throw away the noise and keep the detail. Otherwise, DCinema 4K (or 2K) files wouldn't be the approx. 100GB they are now. ![[Razz]](tongue.gif)


Julio Roberto

Jedi Master Film Handler

Posts: 938

From: Madrid, Madrid, Spain

Registered: Oct 2008

posted 04-25-2009 03:46 PM

Yeah, that's why we should differentiate here (and I always try to) between "true-2K" or "true-4K" and whatever formats merely call themselves that.

As I've said before, it's very often that a movie originates in say s35 film, is scanned (uncompressed) at 10-bit log 2048x856, to then be compressed with M-JPEG2K-12bits to around 200Mb/s to be shown "anamorphic" on DCI projectors.

Obviously that's not the best a 2K system can work.

But new cameras coming out this year are all well above 4K-bayer (smallest one being 5K-bayer), and up to 28K bayer, with 9K-bayer being nice-and-cheap. A 9K-bayer camera can probably give the detail of a true-4K camera under most conditions, and of a 3K camera under all conditions. Even the RED is receiving an upgrade to 4.5K-bayer.

In other words, true-4K may not be all that common today, just like not even true-2K is that common, but it will be very soon and, regardless, resolutions of over true-2K (but below 4K) are readily obtainable with today's scanners and cameras, so it wouldn't hurt to have projectors of over 2K.

I insist that, if TI had a 4K projector for a reasonable price, everybody on this board would be laughing at the prospect of replacing 35mm with 2K and calling it equal to HD-for-home-use-only.

Now a cheap reproduction system (redray, $1000, up to 4K 10-bit) could start showing up, together with a cheap camera (the small Scarlet, 3K-bayer, $3700), which could put a "home" system to shoot your kid's birthday with at a quality level equal or perhaps superior to DCinema 2K.

Of course, a 4K home projector or monitor are still expensive (i.e. JVC DLA-SH4K), but this may change soon if a format that can make use of it shows up. At least, it would be interesting hooking it up to something like the philips cinema 21:9 (4000€)

All DCinema needs to outdo 35mm under normal circumstances is 3K. 4K is overkill, and (true) 4K would look like overkill-imax to everyone in the theater except for the first 2 or 3 rows.

To me, it's obvious that Redray will not contain 4K of image detail, but that doesn't mean that the tests they have run aren't true: when people are shown a master level 4K (somewhere between true uncompressed 2.7k and 3.2K) and a redray 4K copy, under normal non-optimal viewing conditions, most can not see significant differences.

If you want the short answer: for me, 35mm can be replaced with a (theoretical) true-3K system just fine. 4K is overkill, but hey, if it's here, then why not?

2K is just a tad short, but ok often times (as a replacement for 35mm film).

What Mark says about the screen-door effect (pixel fill factor) is true. For whatever reason, we see a 35mm image (say slightly underexposed) full of "black random dots everywhere" (film grain) and we think it's fine and "beautiful".

We see one with zero noise where there are fixed-separation "black lines" (screen door), and we find it objectionable (screen perforations making it worse notwithstanding).

Why? They can both be considered "noise" at worst, except that one is fixed and the other jumps randomly on the screen.

Well, we are aesthetically drawn to forgive film's noise because we are used to seeing it (and ignoring it) and it has always been there. In 10 years (pretending 2K DLP stays), nobody will "notice" screen doors anymore, as we will have learned to take them for granted and ignore them.

We equate pixel separation with a lesser amount of information while we totally overlook that film grain IS noise and not information. We see a noise-free image and think: "something is missing, there must be a lack of detail". We see a grainy (noisy) film image and think: "Oh, look, how beautiful ... there must be tons of detail in all that grain".

But that's not often the case.


Julio Roberto

Jedi Master Film Handler

Posts: 938

From: Madrid, Madrid, Spain

Registered: Oct 2008

posted 04-25-2009 05:54 PM

Just to clarify myself, when I talk about replacing 35mm with a (true) around 3K theoretical system, I'm talking about your average quality, release print for cropped 1.85 film, not a pristine full frame S35 negative, i.e.

Also, if one wanted to properly obtain 3K with a fixed grid system from a random (grain) one like when scanning film, it would have to be scanned at 6K at least. Just don't confuse optimal scanning resolution with "true detail".

If you have true 3K detail and it's not spatially aligned 1:1 with your acquisition device (camera or scanner), you cannot capture it at 3K and will get plenty of ugly aliasing ![[Smile]](smile.gif)
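The alignment problem can be illustrated with a toy one-dimensional sketch. It assumes that "3K of detail" means 1500 light/dark cycles across the frame, models the detail as a pure sine pattern, and uses ideal point samples; the function name is made up for illustration and none of this describes a real scanner.

```python
import math


def sampled_contrast(cycles: int, samples: int, phase: float) -> float:
    """Peak-to-peak range of a unit sine pattern as seen by evenly spaced point samples."""
    values = [
        math.sin(2 * math.pi * cycles * i / samples + phase)
        for i in range(samples)
    ]
    return max(values) - min(values)


CYCLES = 1500  # assumed stand-in for "3K of detail" across the frame

for samples in (3000, 6000):
    for phase in (0.0, math.pi / 4, math.pi / 2):
        contrast = sampled_contrast(CYCLES, samples, phase)
        print(f"{samples} samples, phase {phase:.2f} rad: contrast {contrast:.2f} of 2.00")
```

With 3000 samples the result depends entirely on how the grid happens to line up with the pattern: anything from full contrast down to none at all. With 6000 samples the pattern is always recorded, although in this toy model the contrast can still drop to about 1.41 for an unlucky phase.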

Once you get to 4K, you are at about the limit of the eye resolution under most normal viewing conditions in the cinema.

Just like we can think of the eye as being two different visual systems, one for the "day" when there is plenty of light which yields rich colors and another one that becomes more prevalent at low light levels (night vision) and is more "black and white", we can also think of our eyes as having two detail/spatial frequency transfer functions: one for near-field work and one for far-field.

When we sit very close to our computer monitor or we are repairing a machine, we move our eyes pretty close to the subject of interest, cross our eyes slightly so that both eyes converge on the same point, and scrutinize the image for high frequency detail. When we get close to a cinema screen (or sit in the first row) or when we look at a frame of a print close by, we see one thing.

When we sit a few feet far away from our TV or cinema screen or whatever, we enter a different visual realm and we are no longer able to see that detail, and other, more important low-frequency spatial detail becomes our most important visual stimulus.

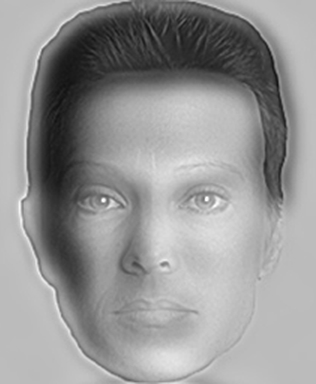

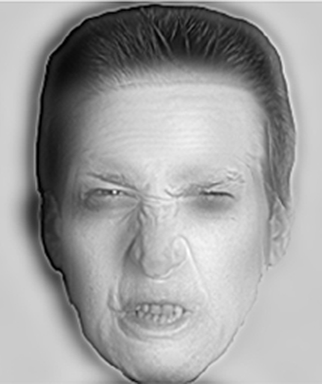

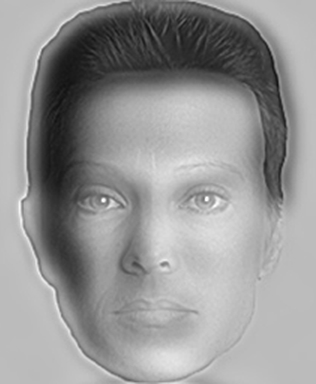

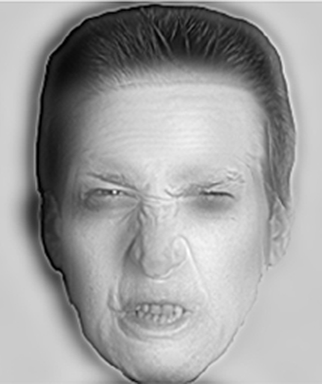

A dramatic demostration are the pictures I'm placing here below.

Get close to your computer screen, a bit more than normal, say 30cm away (1 foot) away. Look at the pictures closely for as long as you want. The top one is of a "pretty woman". The one underneath if of an "ugly old man". No matter how hard you look at them, that's what they are and what you can see.

Now get up and move away from your computer monitor about 6, 7 or 8 steps (about 4 meters, 13 feet). What you will see is that now the top picture is of an angry man, and the bottom one of a "pretty woman". If you don't see it, take another step back (I hope there is enough room between your monitor and your back wall in your room ![[Wink]](wink.gif) )

No matter how hard you try to see the other picture, you just don't see it. You can't.

Depending on your monitor size, etc, you may have to go a step or two farther away, etc.

Now slowly walk back towards your monitor while looking at the pictures. At some distance, the faces will start to switch again and the pretty woman becomes an ugly man and vice-versa. Take a step or two closer and scrutinize the images all you want. You can no longer see the other image, and no matter how hard you try, the images are now the complete reverse to what you were seeing before.

What you can see very close by ("near field") is totally different from what you can see at "normal cinema viewing distances" (far field).

And thanks to this, compression works wonderfully ![[Razz]](tongue.gif) .

But also thanks to this, Imax wastes like 4 times the information stored, since nobody can see it (except, perhaps, some people in the front row with 20/20 vision and no glasses or contact lenses, or those examining the frames under a microscope). It just goes to waste. Imax is overkill.

We shouldn't obsess with pixel count, etc, but concentrate on what we can see normally and what bothers us (screen door, strobe, i.e.) and what doesn't (some film grain).

None of which changes that I consider 2K a bit short for a good 35mm replacement and that it should be at least 3K ![[Big Grin]](biggrin.gif) True-4K would be a luxury, but why not? We are almost there and the digital revolution has just started. Think 5 years from now, when those Christies and Barcos are half paid off.

Steve, there have been experiments and studies on projected large-image resolution, perceived detail, limiting resolution, etc. If you extrapolate the results, I think you can reach the conclusion that (true, well done) 4K is about all the information most people can see in your average theater environment except for those sitting in the first couple of rows, who are the only ones who could tell the difference between something like Imax and our theoretical 4K system. If they have perfect eyesight, that is.

[ 04-25-2009, 06:58 PM: Message edited by: Julio Roberto ]


Greg Anderson

Jedi Master Film Handler

Posts: 766

From: Ogden Valley, Utah

Registered: Nov 1999

posted 04-25-2009 09:23 PM

I was at NAB this year and was disappointed that RED didn’t have a booth. So, they held a private unveiling of their new Red Ray technology but they didn’t do anything on the general, showroom floor. I wonder why not.

Meanwhile, I enjoyed NHK’s presentation of 8K projection with 22.2-channel sound. I think they also said it was 60 frames per second (not fields). And, amazingly enough, they’ve already done tests where they’ve actually broadcast this stuff… over the air! I’m a sucker for ultra-sharp pictures with deep focus (rather than grainy pictures with artistic, overly-shallow depth of field). Most of the stuff they showed (including night, exterior stuff) featured deep focus and I loved it! The fun of 8K is that it really seems like you’re looking through a giant window. So it seemed more “realistic” than pretty much every IMAX and/or 3-D presentation I’ve seen. But the sound was overkill.

My new, favorite “buzz-word” is 8K.

I can't imagine anyone shooting a full feature in this format. It's just too much detail. Traditional make-up would be a problem and the audience will be able to see every last flaw in a "beautiful person's" face. But perhaps for documentaries and other, traditional IMAX stuff, this 8K thing gets my vote.


Bobby Henderson

"Ask me about Trajan."

Posts: 10973

From: Lawton, OK, USA

Registered: Apr 2001

posted 04-25-2009 10:56 PM

Motion graphics and effects compositing software can selectively blur away "crow's feet" around the eyes and other crags on an actor's face. The same tools are often used to cover up things that don't need to be seen in the frame (wires holding kung fu actors in the air, cables, boom mics, etc.).

Principal photography on any movie should be shot with the best levels of quality the budget will allow. If the footage needs to be grunged up with grain, desaturated or shifted to a certain color scheme that can happen in post.

I like deep focus photography, but there's nothing wrong with very shallow DOF photography either when it makes sense for that particular camera shot. Every creative option should be available to the filmmaker.

As to the original topic, the 4K thing at only 10 million bits per second sounds very suspicious. And the arguments about needing only so many pixels is a pretty tired worn out one. It's based on the shaky notion that viewers just need to watch the material from a far distance or on a more modest screen size. The imagery needs to be produced with the most demanding viewing situation in mind.

Take a vehicle wrap graphic, for instance. If I used the same "people are only going to view this from far away" ethic, the full-sized wrap could be composed at a resolution of only 8 to 16 pixels per inch. But then you get to explain to the vehicle's owner why the wrap graphic looks like jaggy shit to him when he is grabbing hold of the car door handle. 72ppi at full size is about the lowest resolution I can get away with using. It would be nice to be able to design in lower resolutions. The computer wouldn't have to choke from performance demands and a single vehicle wrap project wouldn't be consuming upwards of 20GB of hard disc space.

The PDF Brad linked seemed like a sales pitch for the Panavision Genesis camera, where it did damage control for recording only a 1080p based image. They knock IMAX cameras with their 4K grid test, but let's see the same test run on the Genesis camera. Better yet, rotate that grid of lines by 15° or 45° and see how quickly that 1080p pixel grid goes gray. It will go gray a LOT earlier than the IMAX camera. Further, the angled lines will get jaggy and then produce nasty moiré problems very early on before the whole grid test goes gray.


Julio Roberto

Jedi Master Film Handler

Posts: 938

From: Madrid, Madrid, Spain

Registered: Oct 2008

posted 04-26-2009 05:35 AM

I agree that, the more resolution you have, the "better".

As long as it's "free".

Since you usually have to pay dearly for it once you get past a certain point, whether in storage space, bandwidth, projection technology or whatever, I think it's better to concentrate on what you can actually see when you are not scrutinizing the image with a magnifier, but under normal viewing conditions for the average person, which in a theater environment means people sitting down at a fair distance from a not-too-large screen.

Once that part is solved, you can start adding even more detail, technology and price permitting, for those sitting close by or willing to get up from their seats and walk toward the screen to see the subtleties of the capillary vessels in Angelina Jolie's eyes.

I never exactly considered Imax as "better", as it was just "more of the same" (i.e. take your average piece of 35mm film and just put 10 pieces together) and the price was disproportionately high (i.e. you could buy 10 projectors and 10 35mm prints and it was cheaper).

For me, "better" is not "bigger and more expensive". Otherwise, just take a piece of medium format film 150mm across, run it at "20 perforations", call it "macro-imax" and it's "better".

It's not "better" than 35mm film ... it is exactly the same as 35mm film except that you used 150mm of film instead of 35 and thus, you can make the projected result proportionately bigger at the same resulting "resolution".

If you use twice the (size of) film and it costs 2.5x more to make (i.e. size of lenses), then it's not "better". If it costs 1.9x to make and has 2x the "quality", then it starts to be better in my book.

It's the same with digital. Do we have a problem with 2K? No problem. We put 16 projectors together in perfect synch (it can be done), shoot in imax and scan at 16K (it can be done).

Done. Digital is now better than 35mm.

But it would be way, way too expensive.

"Better" is when its the same-or-better quality and also about the same or cheaper cost.

Digital is still not "better" than film. But there is little doubt it will get there in no time with cameras and post production coming in fast. What's worse, from my point of view, is the projection part, as it is a bit inferior to film and far more expensive than film projectors.

Now, it compensates by saving the print costs. A 35mm projector is only part of the system, as it needs film prints to run. Your average theater projector might use up to, say, $25,000 a year in prints, while the digital projector basically takes $50 in "print costs" to run. So over a 10-year life, a 35mm projector probably costs, say, $30,000 up front for the projector/platter itself and $250,000 in prints to keep it projecting movies (a new one every 2 weeks, at $1000/print). Lamp costs are similar between digital and 35mm.
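The arithmetic behind those round numbers can be sketched as follows. The film-side figures are the ones quoted above; reading the "$50" as a cost per title is an assumption made only for this illustration, since the post does not say what period it covers.

```python
YEARS = 10
WEEKS_PER_TITLE = 2           # "a new one every 2 weeks"
PRINT_COST = 1_000            # dollars per 35mm print
FILM_PROJECTOR_COST = 30_000  # projector/platter, paid up front
DIGITAL_COPY_COST = 50        # assumption: dollars per title on the digital side

titles_per_year = 52 // WEEKS_PER_TITLE
film_prints_per_year = titles_per_year * PRINT_COST
film_total = FILM_PROJECTOR_COST + film_prints_per_year * YEARS
digital_copies_total = titles_per_year * DIGITAL_COPY_COST * YEARS

print(f"35mm prints per year: ${film_prints_per_year:,}")
print(f"35mm projector plus prints over {YEARS} years: ${film_total:,}")
print(f"Digital copies over {YEARS} years (projector not included): ${digital_copies_total:,}")
```

Twenty-six titles a year comes to $26,000 in prints, in line with the rounded $25,000 a year and $250,000 over ten years used above.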

The thing is that theater owners don't see print cost as "their cost" directly, as distributors advance it and recover it through their percentages, spread across the pool of all exhibitors.

And I believe it's distributors who should either lower their take for digital or pay for most of it through VPF, as they are the ones saving the money of the prints.

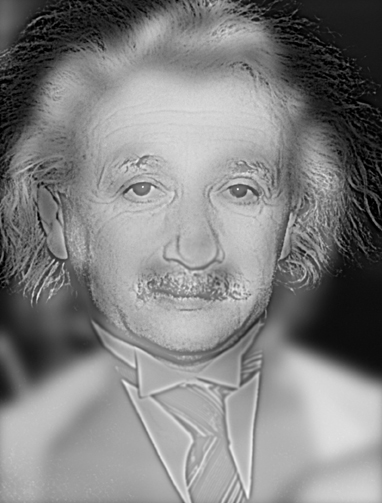

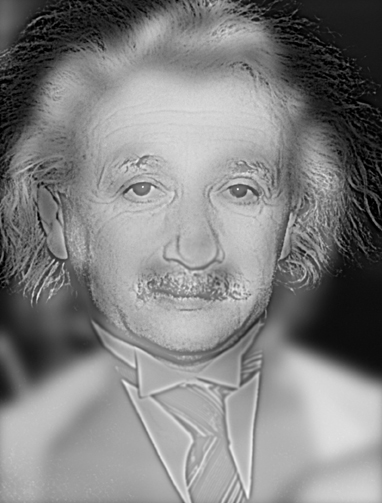

Here is another dramatic example. Get a bit closer to your computer screen than normal, say 1' (30cm) away. In this picture, you can see Albert Einstein from near by. Get up and walk away from your screen about 10 steps (about 5 meters or 16 feet, I hope your room is big enough). Now, who do you see smiling instead? (if it's not working 100% for you, take an additional step or two back). Walk slowly towards the screen and, as you are getting closer, you'll see Einstein again.

There is detail you just can't see unless you are really close to the subject (i.e. just 2-4 feet away), so it doesn't need to be there if it's not going to get used. If it is, well, even better. But if it isn't, it's not all that important as most people won't see it under most conditions if they are not allowed to get that close to the subject/screen.

The eyes are the limiting resolving factor in a lot of imaging applications. Your own eyes can't see details from your monitor starting some 6 feet away (depending on your monitor size and resolution, of course). Imagine in the back row of a theater (although the screen there is much bigger and high-res, etc).

The angular resolving power of the eye is inferior to (true) 4K resolution up to about 2.5 screen heights, which means for just about everybody in your average theater except, perhaps, those in the first couple of rows with good vision and not wearing glasses or contacts.

8K would allow greater angular coverage (i.e. large screen, sitting close to it, sort of like Imax, about 100º instead of the movie theater usual arrangement of screen-to-spectator ratio average coverage of 55º) before the eye resolving power starts to get larger than the available resolution.

Then we'll have to move to 16K, and that would be a little more than the maximum humans can see without having to turn our heads constantly to look around parts of a screen that's larger than our entire field of vision (i.e. we'll never need anything above 16K outside of circlerama-like 360º situations).
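A back-of-the-envelope way to relate viewing angle to pixel count is sketched below. It assumes the eye resolves about 1 arcminute (the usual figure for 20/20 vision), a flat screen seen from a seat on its centre line, and pixels sized so that one pixel at the centre of the screen subtends exactly that angle. The function name is made up for illustration.

```python
import math

ARCMINUTE = math.radians(1 / 60)  # assumed acuity limit of the eye


def pixels_needed(fov_degrees: float, acuity: float = ARCMINUTE) -> float:
    """Horizontal pixels across a flat screen spanning fov_degrees,
    with each centre pixel subtending `acuity` radians at the viewer."""
    # Screen width and pixel pitch, both in units of the viewing distance
    screen_width = 2 * math.tan(math.radians(fov_degrees) / 2)
    pixel_pitch = math.tan(acuity)
    return screen_width / pixel_pitch


for fov in (55, 100):
    print(f"{fov} degrees of coverage: about {pixels_needed(fov):.0f} pixels across")
```

Under these assumptions the usual 55º coverage works out to roughly 3,600 pixels across and 100º to roughly 8,200, which lines up with the 4K and 8K figures above.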

Imax was always "overengineered", but I guess it was done that way because 70mm film was the format that was handily available and it had to be run at 15 perf to get an acceptable aspect ratio.

Otherwise, the quality perceived at the distances and angle of vision in use would be about the same running 70mm film vertically at 8 perf. Imax just wastes too much "resolution" nobody can possibly see from a distance. Could've been used for frame rate that, at those sizes, is really important.

Imax 24fps sucked in that department (their 48fps never really took off...).

Obviously I'm all in for super hi-vision 8K 60fps too ![[Wink]](wink.gif)

Which I'm sure will come 25 years from now. Or maybe earlier.

[ 04-26-2009, 06:49 AM: Message edited by: Julio Roberto ]


All times are Central (GMT -6:00)
